Planning an evaluation

Step by step

June 2020

About this guide

This resource is for people who are new to evaluation and need some help with developing an evaluation plan for a program, project or service for children and families. This guide and the accompanying templates will help you to clarify the purpose of your evaluation, select appropriate methods and have a clear plan and timeline for data collection and analysis. This resource is primarily designed for people who are going to conduct an evaluation themselves or who are managing an in-house evaluation but it can also be useful for people who are preparing to engage an external evaluator.

Although this resource is designed for beginners, it assumes some basic knowledge of evaluation. If you are completely new to evaluation and want to know what an 'evaluation' is and what some of the basic terms used in evaluation mean, or if you just want a refresher on the basics, you could start with this webinar or this introductory resource.

We suggest that you take the time to plan your evaluation in detail before you begin. Although this takes some additional work and time at first, it will save you time later and it increases the likelihood that you will have a high-quality evaluation that runs on time and on budget.

We also recommend consulting with staff and other stakeholders in the development of your evaluation plan. This will ensure that you get different perspectives and that everyone is on board and willing to do the work of evaluation. Developing an evaluation plan collaboratively is particularly important if you are working in partnership with community members or with another service.

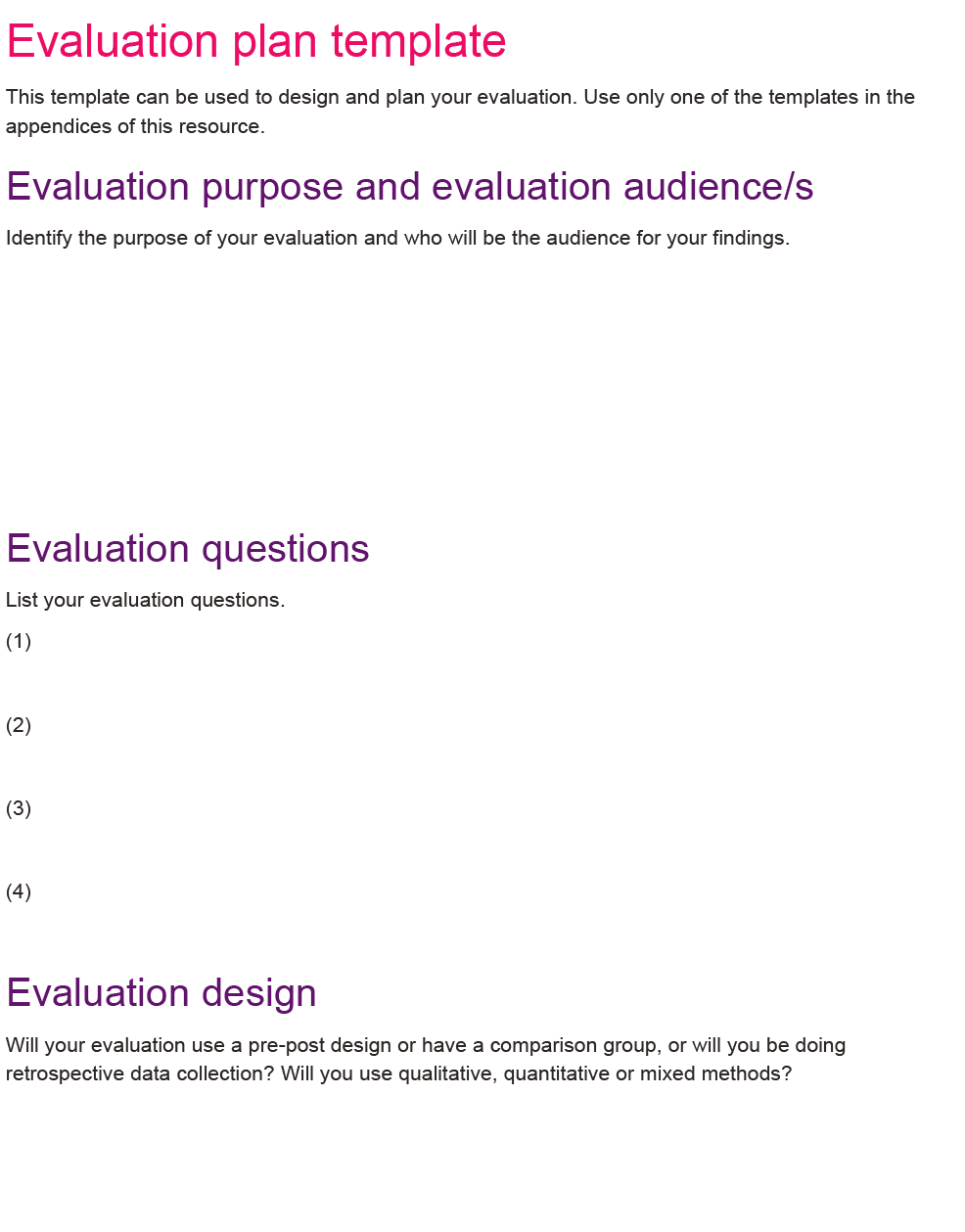

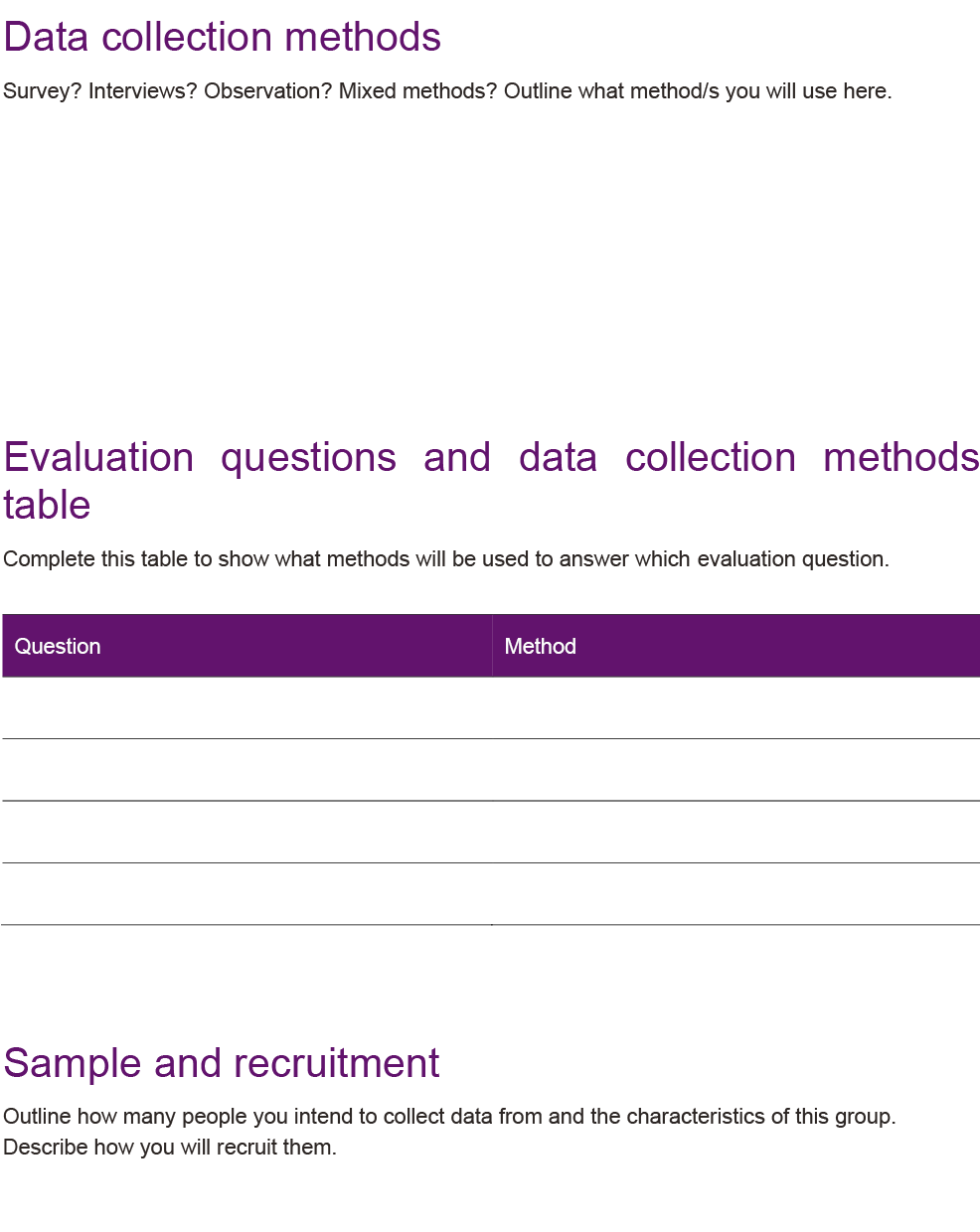

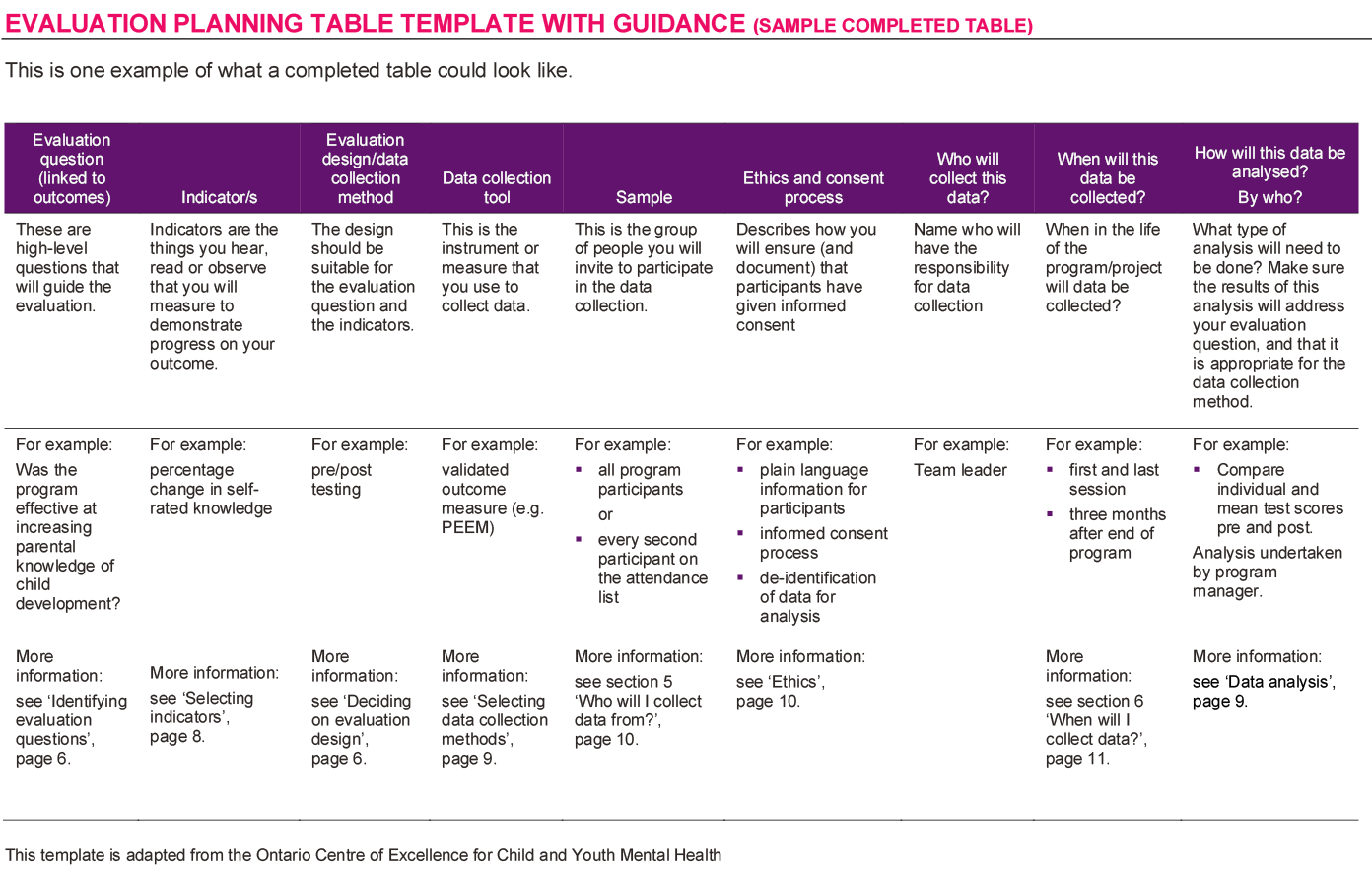

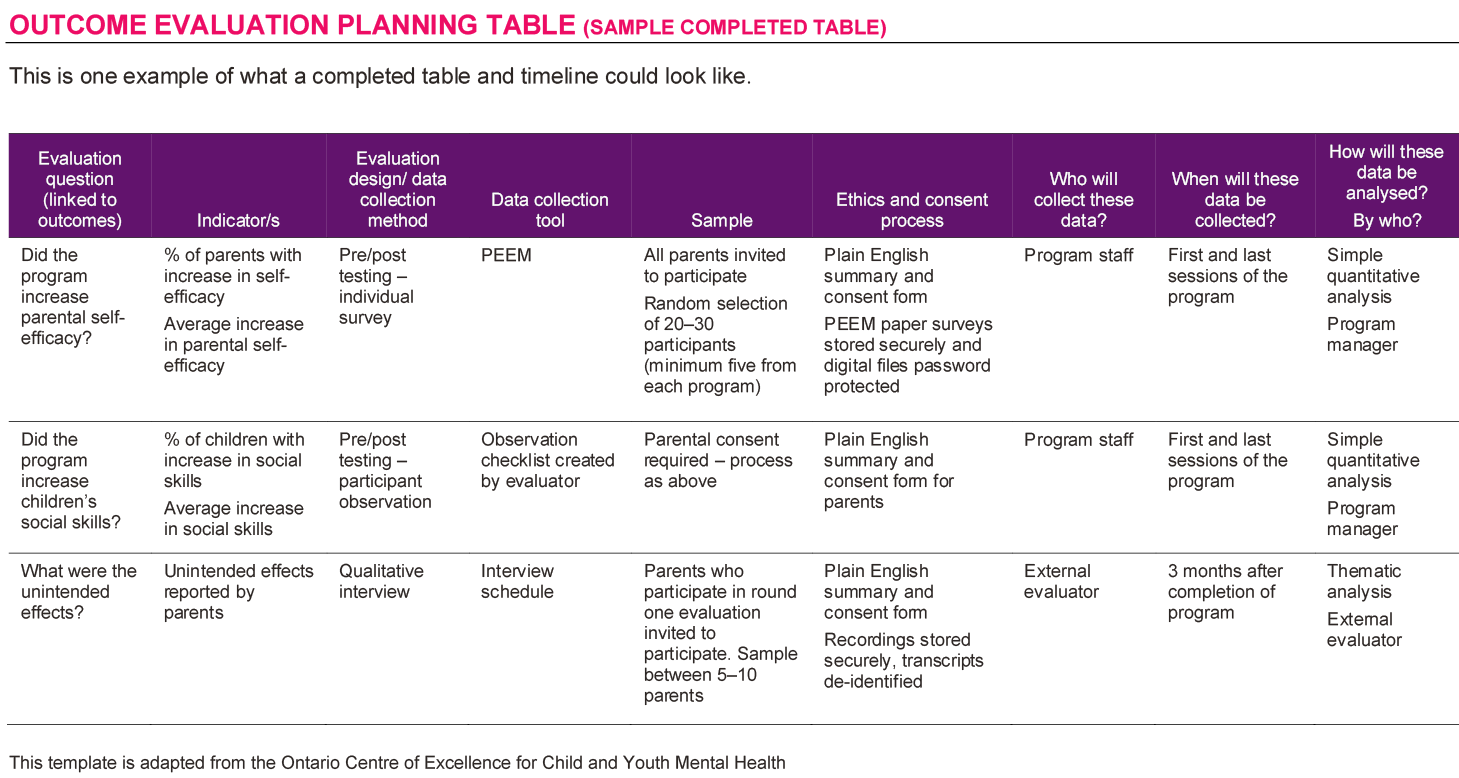

This resource has been designed to step you through the different stages of an evaluation. Although these planning stages are presented here as a linear sequence, in reality you will need to go back and forth between the different sections as you plan your evaluation. This resource includes two templates: a simple evaluation planning table (Appendix A) and a slightly more comprehensive evaluation planning template (Appendix B). We suggest using the template in Appendix B because it is more comprehensive; however, many people prefer the simplicity of the planning table in Appendix A, so we have included both. Use whichever one you prefer; you don't need to use both. Appendix C provides an example of a completed template.

Developing an evaluation plan

An evaluation plan is used to outline what you want to evaluate, what information you need to collect, who will collect it and how it will be done. If you start an evaluation without a plan, you risk collecting data that is not useful and doesn't help answer your questions, and your evaluation is more likely to run over time and over budget. Planning your evaluation carefully will help ensure that your evaluation collects the data that you need and runs on time. Ideally, you will plan your evaluation when you are planning your program. But you can still plan and undertake an evaluation if a program is already underway.

Before developing an evaluation plan, you need to have a clear idea of what your program intends to do (its goals, objectives or outcomes) and how it intends to do it (strategies or activities). A program logic model can help with this.

The diagram in Figure 1 breaks evaluation planning down into seven questions, with some key tasks for each question.

Figure 1: Key steps in an evaluation

Why do I need to evaluate?

Identifying evaluation purpose and audience

The essential first step in an evaluation is identifying the purpose of the evaluation and who the main audience will be. This will help determine the type of evaluation you do and the data you collect. The purpose of an evaluation is often linked to the main intended audience. The audience could be your funders, program staff, managers who make decisions about the future of programs, or it could be community members who have been involved in the program.

Each of these groups might want to know different things about the program. For example, program staff and managers may want to use the evaluation to make program improvements and so they might want to know if the program is reaching the right people, and if it is being delivered as intended. In contrast, program funders or policy makers might want to use the evaluation to make decisions about funding or scaling up a program; so they would want to know whether the program is achieving its intended outcomes - does it work? - or whether it represents value for money. Each of these different evaluation purposes might require a different type of evaluation because each will ask different questions and use different types of data. This resource has more information on different types of evaluation.

In order to make the most of an evaluation, you can also think about additional ways the evaluation results could be used, for example, advocacy or practice improvement, or who the wider audience might be.

What do I need to find out?

Identifying evaluation questions

Evaluation questions are high-level questions that guide an evaluation. They outline exactly what it is you hope to learn through the evaluation. As stated above, different audiences for the evaluation can have different questions they want the evaluation to answer. For example, a senior leader in your organisation might want to know whether a program represents value for money, and a funder might want to know about the outcomes for children and families who have used a service. Evaluation questions are different to survey questions or to the kinds of questions you might ask in an interview; they are higher-level questions that will guide decisions about design and methods, and inform the development of survey or interview questions. See this short article on developing evaluation questions for more information. Figure 2, below, shows how to use a program logic to guide the development of evaluation questions.

If you are doing a simple outcome evaluation, your evaluation question may be something like: 'Was the program successful at meeting its outcomes?' You might then have sub-questions that specify which particular outcomes you intend to evaluate. You might also want to consider including a question about 'unintended impacts'. Unintended impacts are things that happened because of your program that you didn't anticipate; they could be positive or negative.

Deciding on evaluation design

Different evaluation designs serve different purposes and can answer different types of evaluation questions. For example, to measure whether a program achieved its outcomes, you might use 'pre- and post-testing' or a 'comparison' or 'control' group. This resource goes into more detail about different evaluation designs. An evaluation does not need to be technical or expensive to answer your evaluation questions.
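As an illustration of pre- and post-testing, the sketch below compares scores collected before and after a program. The scale, the participant codes and the scores are all hypothetical and are included only to show the arithmetic. A change in scores on its own does not show that the program caused the change, which is where a comparison or control group can help.

```python
from statistics import mean

# Hypothetical scores on a parenting-confidence scale (higher is better).
# Each participant has an anonymous code so pre and post scores can be paired.
pre_scores = {"P01": 12, "P02": 15, "P03": 9, "P04": 14}
post_scores = {"P01": 16, "P02": 15, "P03": 13, "P04": 17}


def paired_changes(pre, post):
    """Return the post-minus-pre change for participants with both scores."""
    return {pid: post[pid] - pre[pid] for pid in pre if pid in post}


changes = paired_changes(pre_scores, post_scores)
average_change = mean(changes.values())
improved = sum(1 for change in changes.values() if change > 0)

print(f"Average change: {average_change:.2f}")
print(f"Participants who improved: {improved} of {len(changes)}")
```

Only participants with both a pre and a post score are included, so it is worth noting how many people were left out and why.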

Ultimately, the most appropriate evaluation design is one that is in line with the purpose of the evaluation, the program being evaluated and the availability of resources. Some factors to consider are:

- the type of program or project you are seeking to evaluate

- the questions you want to answer

- your target group

- the purpose of your evaluation

- your resources

- whether you will conduct an evaluation internally or contract an evaluator.

What will I measure?

You have identified your evaluation questions but what do you need to measure to be able to answer those questions?

Outcomes and outputs

Outcomes are the changes that are experienced by children and families as a result of your program or service. Increases in skills or knowledge, improved relationships, or changes in attitudes or confidence are outcomes that you might be trying to achieve. Outputs are the things that you do to achieve your outcomes, such as delivering counselling sessions, facilitating playgroup activities or running parent education evenings. If you have already identified the short-, medium- and long-term outcomes in your program logic, this makes planning an outcome evaluation much easier because you will have outlined what you need to measure, and you may have already specified the time frames in which you expect those outcomes to occur. This video discusses selecting outcomes for evaluation. You can also use your program logic to identify outputs to measure for a process evaluation.

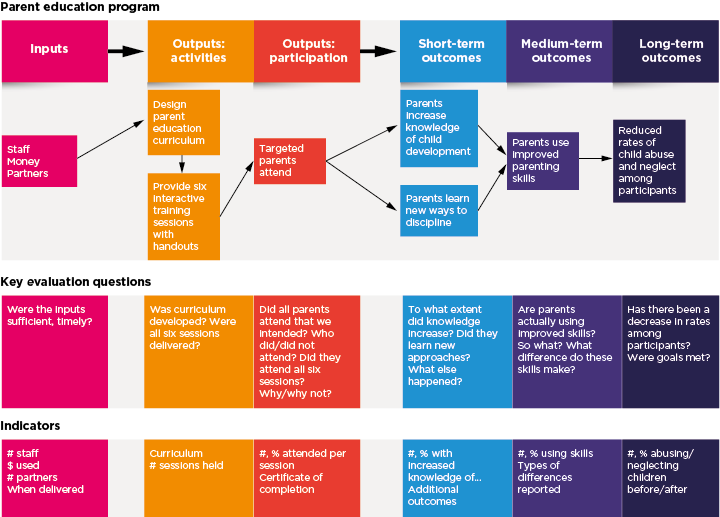

Figure 2 demonstrates how a program logic can be used to develop key evaluation questions and indicators.

Figure 2: Developing key evaluation questions and indicators

Source: Taylor-Powell, E., Jones, L., & Henert, E. (2003) p. 181

Although you may have identified multiple outcomes for your program in your program logic, evaluation requires time and resources and it may be more realistic to evaluate a few of your outcomes rather than all of them. You might also need to define some of your outcomes more narrowly. For example, if one of your outcomes is ensuring more children meet developmental milestones, you could narrow this to a specific aspect of child development (e.g. social development) that can be measured more easily through pre- and post-testing.

When selecting which outcomes to measure, there are a number of factors to take into consideration. The following questions, adapted from the Ontario Centre of Excellence for Child and Youth Mental Health (2013), will help you to make these decisions:

- Is this outcome important to your stakeholders? Different outcomes may have different levels of importance to different stakeholders. It will be important to arrive at some consensus.

- Is this outcome within your sphere of influence? If a program works to build parenting skills but also provides some referrals to an employment program, measuring changes to parental employment would not be a priority as these are outside of the influence of the program.

- Is this a core outcome to your program? A program may have a range of outcomes but you should aim to measure those which are directly related to your goal and objectives.

- Will the program be at the right stage of delivery to produce the particular outcome? Ensure that the outcomes are achievable within the timelines of the evaluation. For example, it may not be appropriate to measure a long-term outcome immediately after the end of the program.

- Will you be able to measure the outcome? There are many standardised measures with strong validity and reliability that are designed to measure specific outcomes (see the Outcomes measurement matrix). The challenge is to ensure that the selected measure is appropriate for and easy to undertake with the target population (e.g. not too time consuming or complex).

- Will measuring this outcome give you useful information about whether the program is effective or not? Evaluation findings should help you to make decisions about the program, so if measuring an outcome gives you interesting, but not useful, information, it is probably not a priority. For example, if your program is designed to improve parenting skills, measuring changes in physical activity will not tell you whether or not your program is effective.

Selecting indicators

An indicator is the thing that you need to measure or observe to see if you have achieved your outcomes. Selecting your indicators will help you know what data to collect.

Outcomes and indicators are sometimes confused. Outcomes, as outlined above, are the positive changes that occur for children, families and communities as a result of participating in your program. The indicators are the markers or signs that show you if change has occurred, what has changed and by how much (Ontario Centre of Excellence for Child and Youth Mental Health, 2013). They need to be specific and measurable. For example, if your proposed outcome is increased literacy among school-aged children in your program, then you might use changes in their NAPLAN scores as an indicator of whether you have achieved this outcome.

Some outcomes and evaluation questions are best measured by more than one indicator. For example, some indicators of increased parental involvement in school could be increased attendance at school meetings; levels of participation in parent-school organisations; attendance at school functions; and calls made to the school (Taylor-Powell, Jones, & Henert, 2003). FRIENDS in the US have a menu of outcomes and possible indicators in the area of child abuse and neglect prevention that can give you some ideas for possible indicators.

How will I measure it?

Types of data

Data are the information you collect to answer your evaluation questions. Data can be quantitative (numbers) or qualitative (words). The type of data you want to collect is often determined by your evaluation questions, your evaluation design and your indicators. Many evaluations are 'mixed methods' evaluations where a combination of quantitative and qualitative data is collected.

Quantitative data will tell you how many, how much or how often something has occurred. Quantitative data are often expressed as percentages, ratios or rates. Quantitative data collection methods include outcomes measurement tools, surveys with rating scales, and observation methods that count how many times something happened.
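As a rough illustration of expressing quantitative data as percentages, the sketch below summarises responses to a single survey item. The responses and the 1-5 rating scale are hypothetical, and `percentage` is a helper written for this example only.

```python
from collections import Counter

# Hypothetical responses to one survey item on a 1-5 rating scale
# (1 = strongly disagree, 5 = strongly agree).
responses = [5, 4, 4, 3, 5, 2, 4, 5, 1, 4]

counts = Counter(responses)
total = len(responses)


def percentage(count, total):
    """Express a count as a percentage of the total, rounded to one decimal."""
    return round(100 * count / total, 1)


# Count how many people chose 'agree' (4) or 'strongly agree' (5).
agree = counts[4] + counts[5]
print(f"Agree or strongly agree: {percentage(agree, total)}%")

for rating in sorted(counts):
    share = percentage(counts[rating], total)
    print(f"Rating {rating}: {counts[rating]} responses ({share}%)")
```

Reporting the number of responses alongside each percentage helps readers judge how much weight to give the result, particularly when the sample is small.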

Qualitative data will tell you why or how something happened and is useful for understanding attitudes, beliefs and behaviours. Qualitative data collection methods include interviews, focus groups, observation, and open-ended questions in a questionnaire. For more information about qualitative methods and how to ensure they are useful and of high quality, see this article from CFCA.

Before you decide on your data collection methods, you should also consider how the data will be analysed (see below).

Selecting data collection methods

Interviews, observation, surveys, analysis of administrative data, and focus groups are common data collection methods used in the evaluation of community services and programs. These methods are discussed in greater detail in this basics of evaluation resource. There are many considerations when selecting your data collection methods, including your evaluation questions and your evaluation design, the participants you work with and your resource limitations. The points below outline some of these considerations.

- The needs of the target group: For example, a written survey may not be suitable for groups with English as an additional language or low literacy. It is best to check with the target group about what methods they prefer.

- Cultural appropriateness: If you are working with Aboriginal and Torres Strait Islander people, or people from culturally and linguistically diverse (CALD) backgrounds, make sure your evaluation methods and the tools you are using are relevant and appropriate. The best way to do this is through partnering with Aboriginal and Torres Strait Islander people (or members of CALD communities) to design and conduct the evaluation. At a minimum, you should discuss the evaluation with participants and pilot any tools or questionnaires that you will use. See the AIFS resource on evaluation with Indigenous families and communities for more information.

- Evaluation question/s: The purpose of the evaluation and the questions you are trying to answer will be better addressed by some data collection methods than others. For example, questions about how participants experienced a program might be best answered by an interview or focus group, whereas questions about how much change occurred might be best answered by a survey.

- Timing: Consider the time that you and your team have to collect and analyse the data, and also the amount of time your participants will have to participate in evaluation.

- Evaluation capacity within your team: Evaluation takes time and resources and requires skills and/or training. Developing surveys or observation checklists, conducting interviews and analysing data are all specialist skills. If you don't have these skills, you may wish to undertake some training or contract an external evaluator.

- Access to tools: Developing data collection tools (such as your own survey or observation checklist) that collect good quality data is difficult. Using validated outcomes measurement tools is often preferable, but these may not be suitable for your group or may need to be purchased. For more information see this article on how to select an outcomes measurement tool and this resource to assist you to find an appropriate outcomes measurement tool.

- Practicality: There is no point collecting lots of valuable data if you do not have the time or skills to analyse them. In fact, this would be unethical (see ethics below) because it would be an unnecessary invasion of your participants' privacy and a waste of their time. You need to make sure that the amount of data you're collecting, and the methods you're using to collect it, are proportionate to the requirements of the evaluation and the needs of the target group.

Who will I collect data from?

Choosing who to include in your evaluation is called 'sampling'. Most research and evaluation takes a sample from the 'population'. The population is the total number of potential evaluation participants - if you are evaluating a service, the population for your evaluation would be everyone who has used the service. For small programs, you might sample all participants in the program. If you are not including all participants in your evaluation, then who you include and who you leave out can affect the results of your evaluation; this is called 'sampling bias'. For example:

- If you collect data only from people who finished your program but only 30 out of 100 people finished, you are missing important information from people who didn't finish the program. Your results might show that the program is effective for 100% of the people you collected data from when, in fact, you don't know whether it was effective for the other 70 people.

- Similarly, if you provide a written survey to people doing your program but there are a number of people with poor literacy, or with English as an additional language, they may not complete it. Then your evaluation may give an incomplete picture of the program. It only tells you whether it was effective for people with English literacy skills.
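The first example above can be worked through with some simple arithmetic. The sketch below uses the figures from that example (100 people started, 30 finished) and adds one hypothetical assumption: that every person who finished reported an improvement. It shows how wide the range of possible results is once the people who did not finish are taken into account.

```python
enrolled = 100            # everyone who started the program
completed = 30            # people who finished and provided data
improved_completers = 30  # assumption: every completer reported improvement

# The rate you would report if you only looked at people who finished.
reported_rate = improved_completers / completed

# The true rate across everyone who started is unknown. It lies somewhere
# between these two extremes, depending on the 70 people with no data.
non_completers = enrolled - completed
lowest_possible = improved_completers / enrolled
highest_possible = (improved_completers + non_completers) / enrolled

print(f"Reported rate among completers: {reported_rate:.0%}")
print(f"Possible rate across all enrolled: "
      f"{lowest_possible:.0%} to {highest_possible:.0%}")
```

Stating the completion rate next to the result makes this uncertainty visible to the reader.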

The number of people you include in your evaluation is called the 'sample size'. The best samples are those that are representative of the population. That is, the people who make up the sample share similar characteristics to the population in a similar proportion, so the people in the sample and the people in the population are similar in terms of gender, age, cultural and linguistic backgrounds and life experiences (e.g. unemployment or number of children). Generally, the bigger the sample size, the more confident you can be about the results of your evaluation. Of course, you need to work within the resources that you have and it may not be practical to sample everyone. The Research Methods Knowledge Base has more information on sampling.
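One simple way to check whether a sample is broadly representative is to compare the share of each group in the sample with its share in the population. The sketch below does this for a single characteristic. The group labels and counts are hypothetical, and `representation_gaps` is a helper written for this example rather than a standard method.

```python
# Hypothetical counts by language background.
population = {"English only": 60, "English as an additional language": 40}
sample = {"English only": 27, "English as an additional language": 3}


def proportions(counts):
    """Convert counts per group into shares of the total."""
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def representation_gaps(population, sample):
    """Sample share minus population share per group, in percentage points."""
    pop_share = proportions(population)
    sample_share = proportions(sample)
    return {
        group: round(100 * (sample_share.get(group, 0) - pop_share[group]), 1)
        for group in pop_share
    }


gaps = representation_gaps(population, sample)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.1f} percentage points")
```

A large positive or negative gap suggests that a group is over- or under-represented, which is worth noting when you describe your sample in the evaluation report.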

You might not need all the clients in your program or service to participate in the evaluation. But you should think about who is included and who is not included and how this could bias the results. You will need to provide information about your sample when you present the findings of your evaluation, so take notes on this as you go.

For information and advice on collecting data from parents and children, particularly those who live in disadvantaged areas, see this resource from AIFS.

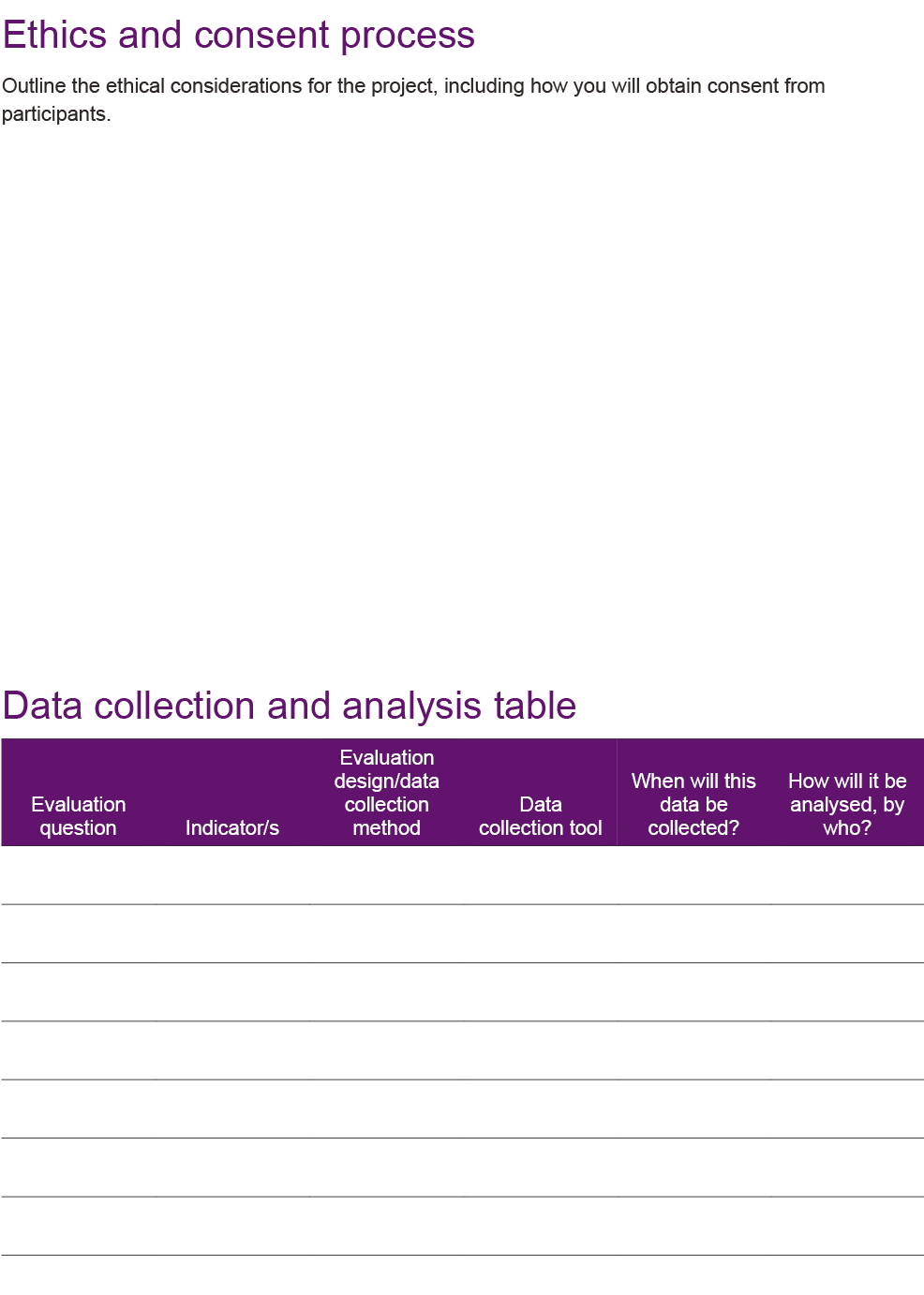

Ethics

All research and evaluation must be conducted in line with ethical principles. Some of these principles (e.g. privacy) are likely already covered by organisational policy but may need to be reviewed to ensure they meet the needs of evaluation. There are also other considerations that are more specific to research and evaluation. Large evaluation projects, particularly those being conducted by universities, may need to go through an ethical clearance process via a Human Research Ethics Committee. More information on this process is available in the resource Demystifying ethical review and in our collection of resources on research and evaluation ethics (see '5.3 Ethical considerations for evaluation projects').

Although smaller evaluations, especially those conducted internally by community organisations, are less likely to go through this process, it is important to think through the ethical considerations of your evaluation. In particular, consider how people will consent to participate, how you can ensure that their participation is voluntary, and how you will protect their privacy through de-identification of data.
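As one possible approach to de-identification, the sketch below replaces participants' names with random codes and keeps the list linking codes to names separate from the data. The records, field names and the `deidentify` helper are hypothetical. Removing names may not be enough on its own, because other details (such as a rare combination of age and suburb) can still identify someone.

```python
import secrets

# Hypothetical survey records that still contain names.
records = [
    {"name": "Alex Smith", "score": 14},
    {"name": "Sam Lee", "score": 11},
    {"name": "Alex Smith", "score": 17},
]


def deidentify(records, id_field="name"):
    """Replace the identifying field with a random participant code.

    Returns the de-identified records and the key linking names to codes.
    The key should be stored securely and separately from the data.
    """
    key = {}
    cleaned = []
    for record in records:
        identity = record[id_field]
        if identity not in key:
            # Random code, so it cannot be worked out from the name.
            key[identity] = "P-" + secrets.token_hex(4)
        row = {k: v for k, v in record.items() if k != id_field}
        row["participant_code"] = key[identity]
        cleaned.append(row)
    return cleaned, key


cleaned, key = deidentify(records)
for row in cleaned:
    print(row)
```

Because the same person always receives the same code, their pre- and post-program responses can still be matched without names appearing in the data set used for analysis.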

To ensure voluntary participation, participants need to be fully informed about how the evaluation will be done, what topics it will cover, potential risks or harms, potential benefits, how their information will be used and how their privacy and confidentiality will be protected. You must also make clear to people that if they choose not to participate in the evaluation, this will not compromise their ability to use your service now or in the future. Written consent forms are the most common way of getting consent but they may not be suitable for all participants. Other ways that people can express consent are outlined in the National Health and Medical Research Council guidelines. For children to participate, parental consent is nearly always required. More information on ethical research with children is available in this short article.

You should also think about the possible risks for participants. Is there any possibility that people could be caused harm, discomfort or inconvenience by participating in this evaluation? For practitioners working with children and families, there could be the potential for harm or discomfort if the questions asked in an interview or survey are personal - questions about parenting and stress could trigger participant distress if the parent is having a difficult time. Think about how the potential for harm or discomfort could be minimised or avoided. For example, only ask questions that are relevant for your evaluation, and ensure that the interviews are undertaken in a private space. Experienced staff could check in with parents and provide contact information for services or counsellors that people can be referred to if required.

There are additional considerations when conducting research and evaluation with Aboriginal and Torres Strait Islander people. For more information, see CFCA's resource on evaluating the outcomes of programs for Indigenous families and communities. The Australian Institute of Aboriginal and Torres Strait Islander Studies has produced Guidelines for Ethical Research in Australian Indigenous Studies.

When will I collect data?

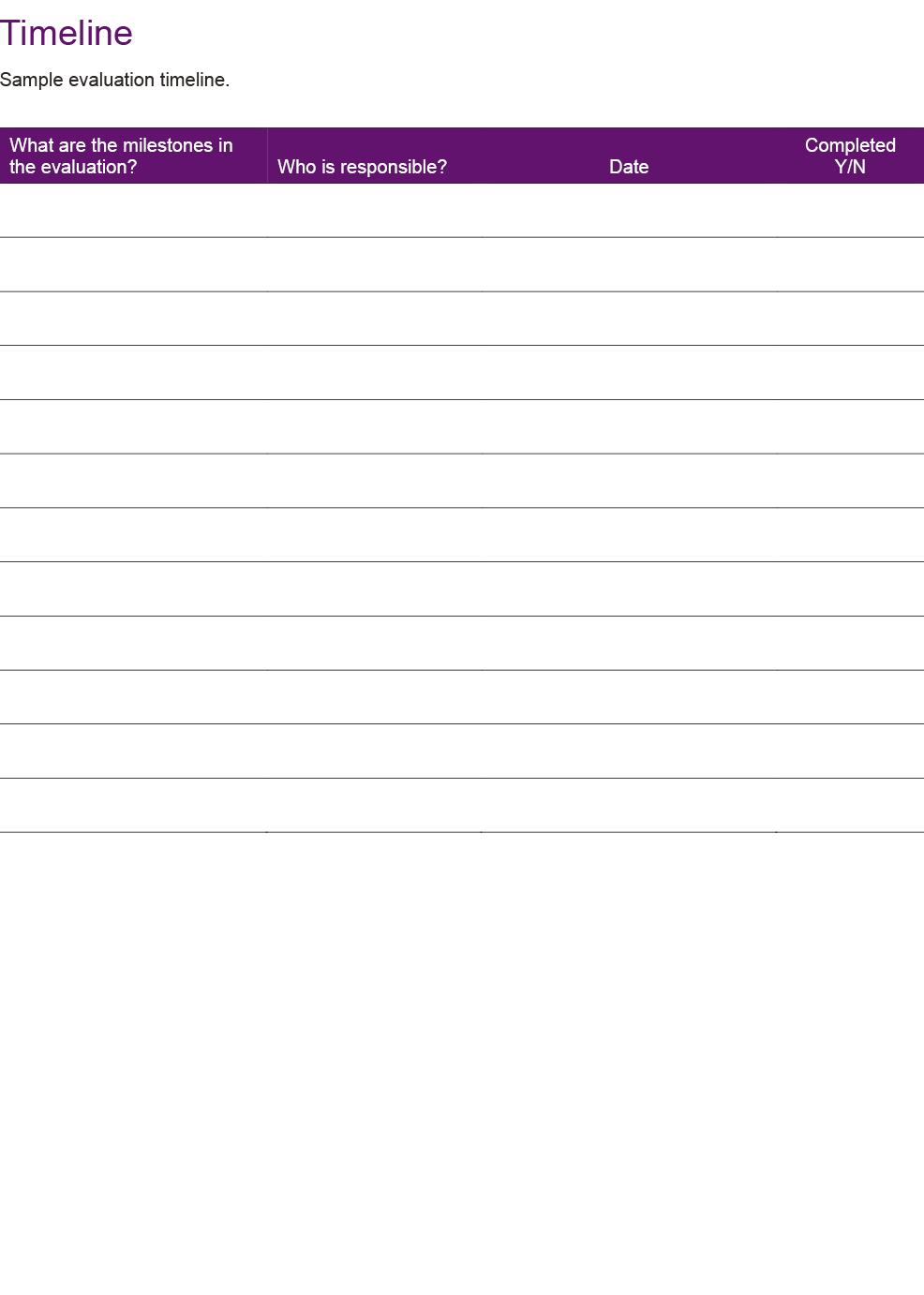

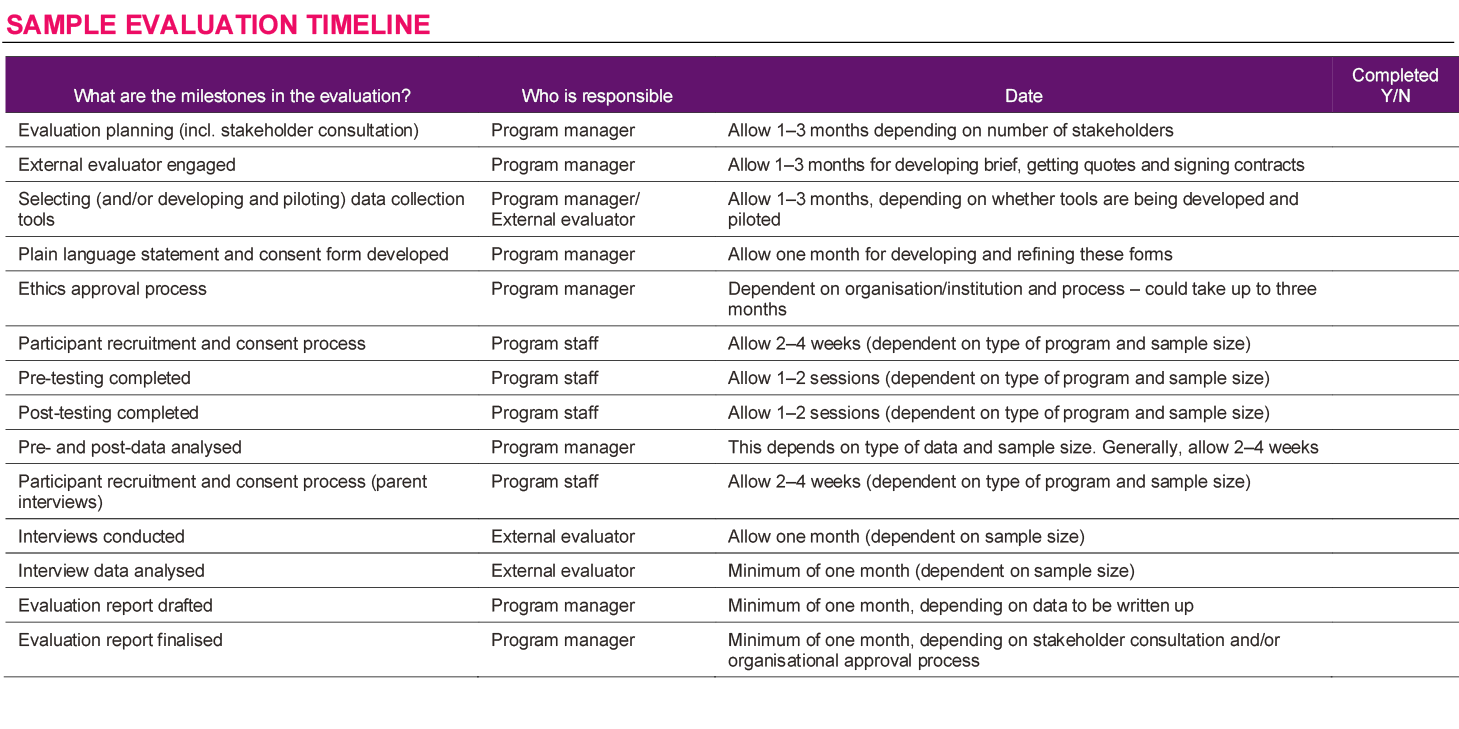

It can take several months to plan your evaluation, design or source your tools, recruit participants, collect and analyse data and report your findings. We have developed an evaluation timeline that you can use to plan and monitor your evaluation to keep it on track. There is a blank template and a completed example template available in Appendices B and C.

Timing data collection

When planning the timing of your data collection, there are a few things to take into consideration:

- Allow enough time for outcomes to be realised. There's no point measuring changes in behaviour after a single session of a program - it takes time for people to apply new skills and knowledge.

- You want to ensure that the timing of data collection fits in with the program activities. For example, pre-testing could be done as part of an induction with families prior to the first session, and you might need to have a longer final session in the program to accommodate data collection. Alternatively, you might need a session or two with families to build rapport before you ask them to complete a pre-testing survey. Make sure that data collection is factored into your session plans and that you have enough staff to support data collection.

- Think about following up with families after they have left a program. This can provide information about whether any change in outcomes has been sustained.

What do I do with the data?

Data analysis

You need to ensure you have the time, skills and resources to analyse the data you collect. Programs such as SPSS (for quantitative data) or NVivo (for qualitative data) can support analysis but skills and knowledge in quantitative or qualitative data analysis are still required. The volume of data that you have, and the questions you are addressing through your analysis, will also determine the type of analysis that needs to be done. For a good discussion of data analysis and the steps involved in analysis and synthesis, see the World Health Organization's Evaluation Practice Handbook, page 54.

Writing up the evaluation

Writing up the evaluation and sharing your findings is a really important step in an evaluation. Pulling the findings together and discussing them is the 'evaluation' part of an evaluation, so it is essential to allow adequate time and resources for this step. You might need to prepare different products for different stakeholders (e.g. a plain English summary of findings for participants, and a presentation for program staff) but it is likely that you will need to produce an evaluation report.

An evaluation report should include:

- the social issue or need addressed by the program

- the purpose and objectives of the program

- a clear description of how the program is organised and its activities

- the methodology - how the evaluation was conducted and an explanation of why it was done this way. This should include what surveys or interview questions were used and when and how they were delivered (and a copy should be included in the appendix)

- sampling - how many people participated in the evaluation, who they were and how they were recruited

- data analysis - a description of how data were analysed

- ethics - a description of how consent was obtained and how ethical obligations to participants were met

- findings - what was learnt from the evaluation (and what it means for the program) and how the results compare with your objectives and outcomes

- recommendations - detailed and actionable suggestions for possible changes to the program or service that have come from the findings

- any limitations to the evaluation and how future evaluations will overcome these limitations

The World Health Organization provides a sample evaluation report structure in its Evaluation Practice Handbook, page 61.

Using and sharing your evaluation findings

There are many different reasons to undertake an evaluation, and many different ways an evaluation can be used. You might have undertaken an evaluation to measure how effective your program is so that you can be accountable to participants and funders. Alternatively, you might have completed an evaluation to understand whether the program is being implemented as intended or to gather information for continuous improvement. The purpose of your evaluation and the evaluation questions you selected to guide the evaluation will determine how you use your findings. If your evaluation report includes recommendations, it can be a good idea to get together with key stakeholders and develop a plan for how the recommendations will be implemented.

In addition to using your evaluation within your organisation, there are many benefits to sharing evaluation findings with different audiences (often called dissemination). Sharing the findings of your evaluation can provide valuable information for other services that may be implementing similar programs or working with similar groups of people. It is also important to report back on your evaluation findings to the people who participated in the evaluation.

The way you present and share information should be appropriate to the audience. The ways you share findings, and who you share them with, should be connected to the evaluation purpose/s and audiences that you identified when the evaluation was in the planning phase. For example, your funders might want an evaluation report, but this is unlikely to be read by service users who participated in the evaluation; a short summary or verbal discussion might be more appropriate for them. To reach a wider audience and contribute to the evidence base, you can present evaluation findings at conferences or in academic journals. The CFCA practice resource Dissemination of Evaluation Findings discusses ways of writing an evaluation report and sharing the findings.

Further reading

- AIFS Evidence and Evaluation Support, Program planning and evaluation guide

- Better Evaluation, online resources to support evaluation

- Centre for Social Impact, Guide to social impact measurement

- Ontario Centre of Excellence for Child and Youth Mental Health, evaluation learning modules

- World Health Organization, Evaluation practice handbook

References

- FRIENDS. (2017). Evaluation planning. Chapel Hill, NC: FRIENDS National Center for Community-Based Child Abuse Prevention. Retrieved from 23.253.242.12/evaluation-toolkit/evaluation-planning.

- Ontario Centre of Excellence for Child and Youth Mental Health. (2013). Program evaluation toolkit. Ottawa, ON: Ontario Centre of Excellence for Child and Youth Mental Health. Retrieved from www.excellenceforchildandyouth.ca/sites/default/files/docs/program-evaluation-toolkit.pdf

- Taylor-Powell, E., Jones, L., & Henert, E. (2003). Enhancing program performance with logic models. Madison, WI: University of Wisconsin-Extension. Retrieved from www.uwex.edu/ces/lmcourse.

- World Health Organization (WHO). (2013). Evaluation practice handbook. Geneva: WHO.

Appendices A and B can be downloaded separately:

Appendix A [Word, 128KB]

Appendix B [Word, 123KB]

Appendix C

This resource was authored by Jessica Smart, Senior Research Officer at the Australian Institute of Family Studies.

Thank you to the stakeholders who participated in consultation to help us develop this resource.

This document has been produced as part of AIFS Evidence and Evaluation Support funded by the Australian Government through the Department of Social Services.

Featured image: © Gettyimages/julief514